CS 180 Project 4 –– [Auto]Stitching Photo Mosaics

Aarav Patel

Overview

In Project 4A, we explore image warping and mosaicing. Specifically, we shoot and digitize pictures, recover homographies, warp the images, and finally blend them into a mosaic. In Project 4B, we explore feature matching and autostitching. Specifically, we automatically detect corner features in an image, extract a feature descriptor for each feature point, match the feature descriptors between two images, use RANSAC to compute a homography, and finally blend the images into a mosaic as in Project 4A.

4A: Recovering Homographies

To recover the homography matrix H between two images, I first had to define correspondence points. For this task, I used the tool provided in Project 3. A homography has 8 degrees of freedom and each correspondence contributes 2 equations, so 4 points are the minimum needed to solve for it. However, I used 8 points to yield an overdetermined system, so that the least-squares solution is less sensitive to error in any single point. I solved the least-squares problem using SVD, then normalized the solution and returned H.
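The least-squares setup described above can be sketched as follows. This is a minimal NumPy illustration rather than the exact project code: the function name `compute_homography` is hypothetical, and normalizing so that the bottom-right entry of H equals 1 is an assumption about what "normalized" means here.

```python
import numpy as np

def compute_homography(src, dst):
    """Estimate H such that dst ~ H @ src (in homogeneous coordinates).

    src, dst: (N, 2) arrays of matching points, N >= 4.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)

    # Each correspondence (x, y) -> (u, v) contributes two linear equations in
    # the 9 entries of H, obtained by clearing the perspective denominator.
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)

    # The least-squares solution of A h = 0 with ||h|| = 1 is the right
    # singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)

    # Assumed normalization: fix the scale so that H[2, 2] == 1.
    return H / H[2, 2]
```

With exactly 4 points the system has an exact solution; with 8 points the same code returns the best fit in the least-squares sense.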

4B: Detecting corner features in an image

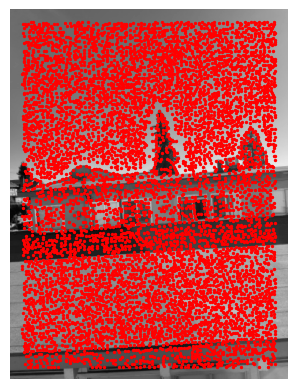

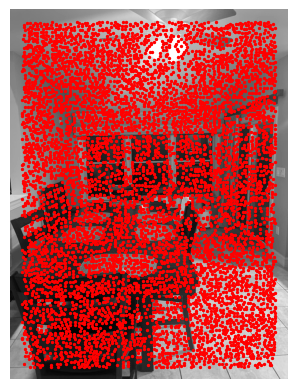

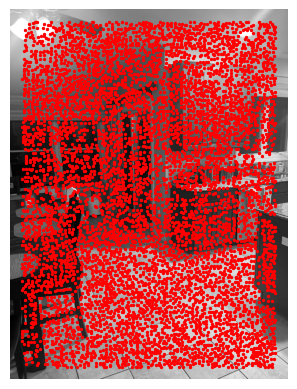

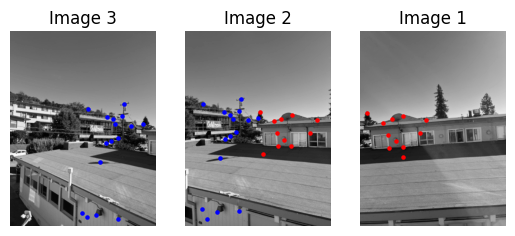

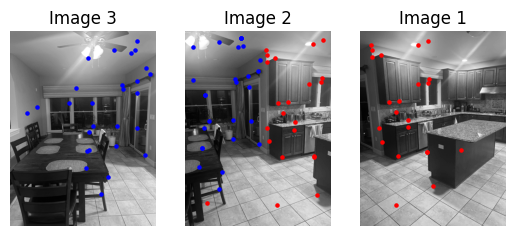

Here, I used the Harris Interest Point Detector to detect the corner points in each image of each set. Here are my results.

Living Room #1, Harris Interest Points

Living Room #2, Harris Interest Points

Living Room #3, Harris Interest Points

Balcony View #1, Harris Interest Points

Balcony View #2, Harris Interest Points

Balcony View #3, Harris Interest Points

Kitchen #1, Harris Interest Points

Kitchen #2, Harris Interest Points

Kitchen #3, Harris Interest Points

I then applied Adaptive Non-Maximal Suppression to reduce the number of points while keeping them evenly distributed over the image.
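The idea behind Adaptive Non-Maximal Suppression can be sketched as below: each point gets a suppression radius, the distance to the nearest point that is sufficiently stronger, and the points with the largest radii are kept. This is an illustrative version only. The function name `anms`, the number of points kept, and the robustness factor of 0.9 are assumptions, not values taken from my implementation. It builds a full pairwise distance matrix, so it is only practical for a few thousand points.

```python
import numpy as np

def anms(coords, strengths, num_keep=500, c_robust=0.9):
    """Return indices of the points kept by adaptive non-maximal suppression.

    coords:    (N, 2) array of corner locations.
    strengths: (N,) array of corner responses (e.g. Harris scores).
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)

    # Squared distance between every pair of points, shape (N, N).
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)

    # Point j suppresses point i when i is clearly weaker than j.
    suppressed_by = strengths[:, None] < c_robust * strengths[None, :]

    # Suppression radius: squared distance to the nearest suppressing point.
    # A point that nothing suppresses gets an infinite radius.
    radii = np.where(suppressed_by, dist2, np.inf).min(axis=1)

    # Keep the points with the largest radii.
    order = np.argsort(-radii)
    return order[:num_keep]
```

Compared with simply keeping the strongest corners, this favors points that are strong relative to their neighborhood, which is what spreads the selection across the image.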

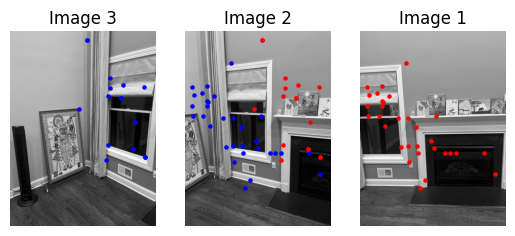

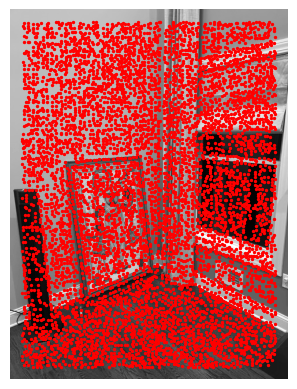

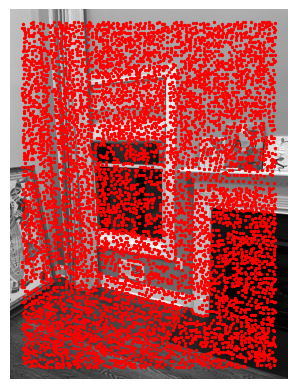

4B: Use a robust method (RANSAC) to compute a homography

Here I implemented 4-point RANSAC as described in class to compute the most likely homography. I then blended the image sets to form mosaics and compared them to the results from Project 4A.
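A 4-point RANSAC loop of this kind can be sketched as follows. This is a hedged illustration, not the exact project code: the names `fit_homography` and `ransac_homography`, the iteration count, the 2-pixel inlier threshold, and the final refit on all inliers are assumptions chosen for the example.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography from N >= 4 correspondences (scale not fixed)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def ransac_homography(src, dst, num_iters=1000, inlier_thresh=2.0, seed=0):
    """Robustly estimate H from putative matches src[i] <-> dst[i].

    Returns (H, inlier_mask), with H scaled so that H[2, 2] == 1.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(src)
    src_h = np.hstack([src, np.ones((n, 1))])

    best_inliers = np.zeros(n, dtype=bool)
    for _ in range(num_iters):
        # 1. Sample 4 matches at random and fit an exact homography to them.
        idx = rng.choice(n, size=4, replace=False)
        H = fit_homography(src[idx], dst[idx])

        # 2. Project all source points and measure the reprojection error.
        #    Degenerate samples can produce inf/nan, which never count as inliers.
        with np.errstate(divide="ignore", invalid="ignore"):
            proj = src_h @ H.T
            proj = proj[:, :2] / proj[:, 2:3]
            err = np.linalg.norm(proj - dst, axis=1)
            inliers = err < inlier_thresh

        # 3. Keep the largest consensus set seen so far.
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers

    if best_inliers.sum() < 4:
        raise ValueError("RANSAC did not find a consensus set of at least 4 matches")

    # 4. Refit on all inliers of the best model (assumed final step).
    H = fit_homography(src[best_inliers], dst[best_inliers])
    return H / H[2, 2], best_inliers
```

The key design point is that each candidate homography comes from only 4 matches, so a single sample with no outliers is enough to find the correct model, and the inlier count then separates it from models fit to bad matches.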

Living Room, 4A, Manual

Living Room, 4B, Automatic

Balcony View, 4A, Manual

Balcony View, 4B, Automatic

Kitchen, 4A, Manual

Kitchen, 4B, Automatic

Overall, the coolest thing I learned from this project was automatic feature matching. It was mind-blowing to see how relatively simple methods can be used to autostitch images together. These methods were developed before the days of deep learning, which I also found very interesting.